Diving deeper into the realm of generative AI, I've come across several articles that I find interesting as a beginner in this field:

The article - Understanding Ghost Attention in LLaMa 2 - explains Ghost Attention, the technique LLaMa 2 uses to keep following an initial instruction across the turns of a dialogue.

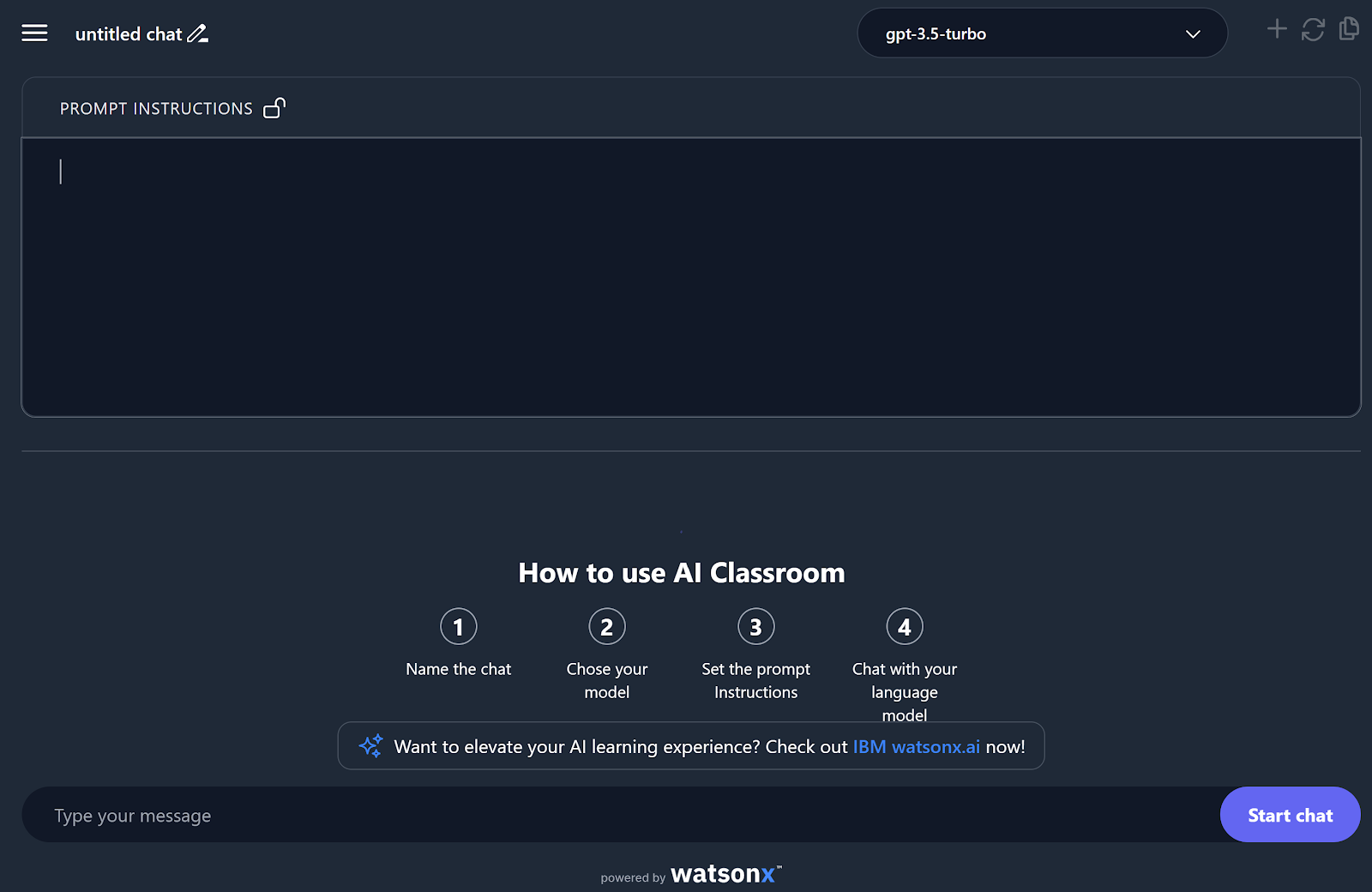

One example of providing instructions for a specific chat is the Prompt Instructions feature in the IBM Watsonx service:

You define the instructions in the upper input field and then chat in the area below.

For a closer look at how LLMs can be prompted to reason, this article explores the differences between "Chain of Thoughts" and "Tree of Thoughts" - Chain of Thoughts vs Tree of Thoughts for Language Learning Models (LLMs)

How can you work better with these systems? You can improve the output using prompt patterns or n-shot prompting - 7 Prompt Patterns You Should Know
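To make n-shot prompting concrete, here is a minimal sketch of assembling a few-shot prompt as plain text. The function name `build_few_shot_prompt`, the `Input:`/`Output:` layout, and the sentiment examples are my own illustration, not taken from the linked article, and no particular model API is assumed.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble an n-shot prompt: an instruction, n worked examples, then the new input."""
    lines = [instruction, ""]
    for text, label in examples:
        # Each worked example shows the model the expected input/output format.
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")
    # The final input is left without an answer for the model to complete.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


# Hypothetical 2-shot sentiment example.
examples = [
    ("I love this phone", "positive"),
    ("The battery died in an hour", "negative"),
]
prompt = build_few_shot_prompt(
    "Classify the sentiment of each input.",
    examples,
    "The screen is gorgeous",
)
print(prompt)
```

The resulting string can be pasted into any chat interface or sent to whichever model you use; with an empty `examples` list it becomes a zero-shot prompt.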

To control the grounding data an LLM uses and constrain its answers in your enterprise Gen AI solutions, consider using Retrieval Augmented Generation (RAG). For an example of how to use it in Azure, see - Retrieval Augmented Generation (RAG) in Azure AI Search
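The idea behind RAG can be sketched in a few lines: retrieve the most relevant documents for a question, then put only those into the prompt as grounding data. The sketch below is a toy under stated assumptions: it ranks documents by simple word overlap instead of a real search index, it does not use Azure AI Search, and the names `retrieve` and `build_grounded_prompt` and the sample documents are hypothetical.

```python
import re


def tokenize(text):
    """Lowercase a text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, top_k=2):
    """Rank documents by how many words they share with the query (toy retriever)."""
    query_tokens = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_grounded_prompt(query, documents, top_k=2):
    """Build a prompt that restricts the model to the retrieved sources."""
    sources = "\n".join(f"- {doc}" for doc in retrieve(query, documents, top_k))
    return (
        "Answer using only the sources below. "
        "If the answer is not there, say you do not know.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {query}\nAnswer:"
    )


# Hypothetical enterprise knowledge base.
documents = [
    "Employees get 25 vacation days per year.",
    "The office is closed on public holidays.",
    "Expense reports are due by the fifth of each month.",
]
prompt = build_grounded_prompt(
    "How many vacation days do employees get?", documents, top_k=1
)
print(prompt)
```

In a real solution the `retrieve` step would be replaced by a search service or vector index, while the prompt-building step stays much the same.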

Additionally, to get more out of LLMs, you can explore the architecture patterns and mental models described here - Generative AI Design Patterns: A Comprehensive Guide
